The featured image of this blog post is based on a picture by JL G on Pixabay

If you are old enough, you probably remember the Y2K bug. Mankind should have learned from it by now, right? Wrong!

What was the Y2K bug?

For those of you who are too young, here is the story. In the good old days of computing, roughly 1960 to 1990, electronic memory was so expensive that programmers used every trick to save a few bytes. For this reason, it was common practice to store only the last two digits of the year when representing a date. Instead of storing 31.03.1975, one simply stored 31.03.75.

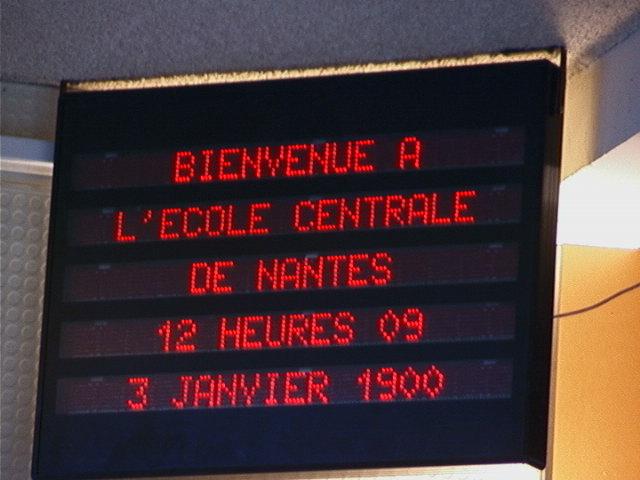

Toward the end of the last century, computer scientists started to notice that this could lead to problems in the year 2000: instead of 2000, most programs would assume that it was suddenly 1900 again, and people would have a negative age. Airplanes might even fall out of the sky, the banking system might crash, and nuclear missiles might be launched. So a large number of programmers were kept busy finding all these year-2000 (Y2K for short) bugs and correcting them, now using four digits to represent the year. In the end, as we all know, the apocalypse did not happen, although there were some glitches.
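To make the negative age concrete, here is a minimal sketch of the arithmetic. The function names are made up for illustration and do not come from any particular legacy system.

```python
def age_two_digit(birth_yy: int, current_yy: int) -> int:
    """Age computed from the last two digits of each year only."""
    return current_yy - birth_yy


def age_four_digit(birth_year: int, current_year: int) -> int:
    """Age computed from full four-digit years."""
    return current_year - birth_year


# Born in 1975, looked at in 1999 and then in 2000.
print(age_two_digit(75, 99))        # 24
print(age_two_digit(75, 0))         # -75: the year "00" is read as 1900
print(age_four_digit(1975, 2000))   # 25
```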

Lessons learned

On the technical side, we have learned that it is a stupid idea to save two digits when storing a date. All in all, the worldwide cost of fixing this problem has been estimated at roughly 400 billion US dollars. As usual, some people claim that it was all a waste of money because nothing happened, while others believe that the apocalypse was avoided precisely because we spent that much money.

However, the main point is surely that we learned not to repeat this stupid mistake. At least, that is what one would think. Indeed, on desktop computers, on the web, in the cloud, you name it, one now usually deals with a full date specification.

Lessons ignored

I am currently into real-time clocks (RTCs), because I have a lot of time (pun intended). What amazes me is that almost all RTCs currently on the market store only the last two digits of the year. This is not a problem yet. However, eventually (in about 80 years), systems using these RTCs will hit the same wall as the systems I mentioned above. Worse, because these are mainly embedded systems, nobody will have any idea how to fix them.

Given all the problems we currently face, this might be the most negligible one. And at some point in 2087, the European Union will probably rule that all RTCs need to store at least three digits of the current year. And with that, we will avoid the Y2.1K apocalypse. Much more likely, though, is the scenario in which some political party stops this initiative because it might hurt the industry, and only in 2098 do people start to worry about what will happen to all their smart gadgets in two years’ time. The electronics industry then has a field day because everybody has to buy a new smartphone, hololens, flying autonomous car, you name it.

As mentioned, this will not turn into a problem for my generation or the generation of my kids. However, it completely baffles me why the engineers who design new RTCs still stick to the scheme from the beginning of computing in the 1960s. Two additional digits would fit easily into one byte, and implementing the additional leap year rule is not rocket science either. Even better, why not use a Unix time counter? The reason for not doing this cannot be the 1/1000 of a cent saved by omitting one byte of storage and a minimal amount of other electronics.
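To show how small the additional leap year rule is, here is a sketch comparing the every-fourth-year rule, which is all a two-digit year allows, with the full Gregorian rule. The function names are my own, and real RTCs implement this in hardware, not in Python.

```python
import calendar


def is_leap_simple(yy: int) -> bool:
    """Every fourth year is a leap year; works with two-digit years."""
    return yy % 4 == 0


def is_leap_gregorian(year: int) -> bool:
    """Full Gregorian rule: century years are leap years only if divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# The simple rule happens to be right for 2000, but it is wrong for 2100.
print(is_leap_simple(0))          # True
print(is_leap_gregorian(2000))    # True
print(is_leap_gregorian(2100))    # False

# Cross-check against the standard library.
assert all(is_leap_gregorian(y) == calendar.isleap(y) for y in range(1900, 2500))
```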

Finally, let me mention two RTCs that head in the right direction. The RV-3028 by Micro Crystal contains an unsigned 32-bit Unix time counter. With the Unix convention that time started on 1.1.1970 at 00:00, this will bring you to 2106 before a rollover of the counter might lead to confusion. However, one can base such a counter on any point in time and thereby make sure that the system will work far into the future. The other RTC is the MCP79410 by Microchip, which at least gets the leap year rule for 00 years right until 2399, while all the others already give up at the end of 2099 (guess why).
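The 2106 figure is easy to check. The sketch below computes the last point in time an unsigned second counter can represent for a given starting point. The function name and the alternative epoch of 1.1.2000 are only illustrative assumptions, not something taken from a datasheet.

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def last_representable(epoch: datetime, bits: int = 32) -> datetime:
    """Last second an unsigned counter of the given width can hold."""
    return epoch + timedelta(seconds=2**bits - 1)


# Classic Unix epoch: the counter wraps in February 2106.
print(last_representable(UNIX_EPOCH))   # 2106-02-07 06:28:15+00:00

# Same counter, based on a later (hypothetical) epoch.
custom_epoch = datetime(2000, 1, 1, tzinfo=timezone.utc)
print(last_representable(custom_epoch).year)   # 2136
```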

A simple cure

My suggestion for new RTC designs would be to implement only a Unix time counter. Maybe make it compatible with current-day computers and embedded systems, and spend 8 bytes on the counter. By the way, one could implement it by holding the upper three bytes at constant zero and leaving the use of these upper three bytes to future generations. The alarm function could also be reduced to a single 8-byte register. Any recurring alarm could be implemented by the embedded system.
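As a rough sketch of what this buys you: the snippet below reads a hypothetical 8-byte counter register and estimates how many years a counter of a given width covers. The big-endian byte order and all names are assumptions for illustration, since no such chip exists yet.

```python
SECONDS_PER_YEAR = 365.2425 * 86400  # mean Gregorian year


def read_counter(register: bytes) -> int:
    """Interpret an 8-byte register as a big-endian unsigned second counter."""
    if len(register) != 8:
        raise ValueError("expected an 8-byte register")
    return int.from_bytes(register, "big")


def years_covered(active_bytes: int) -> float:
    """Approximate time span covered by a counter with this many counting bytes."""
    return 2 ** (8 * active_bytes) / SECONDS_PER_YEAR


# Upper three bytes held at zero, lower five bytes all ones.
full_40_bit = bytes(3) + b"\xff" * 5
print(read_counter(full_40_bit))    # 1099511627775
print(round(years_covered(4)))      # 136
print(round(years_covered(5)))      # 34842
```

So even with three of the eight bytes permanently zero, the five remaining bytes last for tens of thousands of years.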

Most often, the embedded system will work with such Unix time representations anyway, and they make it very easy to compare times, compute intervals, and the like. For the conversion of Unix time into a time/date representation, there are standard libraries on every platform. In other words, it would simplify the design of the RTC and of the interface software, and it would provably prevent the return of the Y2K bug. Then we would never ever again have to worry about how to represent time, and our embedded systems would even continue to work correctly after the universe has ceased to exist. Doesn’t that sound like the ultimate sales pitch?
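Here is a small sketch of how little code this takes, using Python's standard library in place of whatever an embedded platform offers. The helper name `to_utc` and the sample values are only for illustration.

```python
from datetime import datetime, timezone


def to_utc(ts: int) -> datetime:
    """Convert a Unix second counter value to a UTC date and time."""
    return datetime.fromtimestamp(ts, tz=timezone.utc)


now = 1_000_000_000        # sample counter value read from the RTC
alarm = now + 90 * 60      # alarm 90 minutes later

print(to_utc(now).isoformat())    # 2001-09-09T01:46:40+00:00
print(alarm > now)                # True: comparing times is an integer comparison
print(alarm - now)                # 5400: an interval is a subtraction
```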
